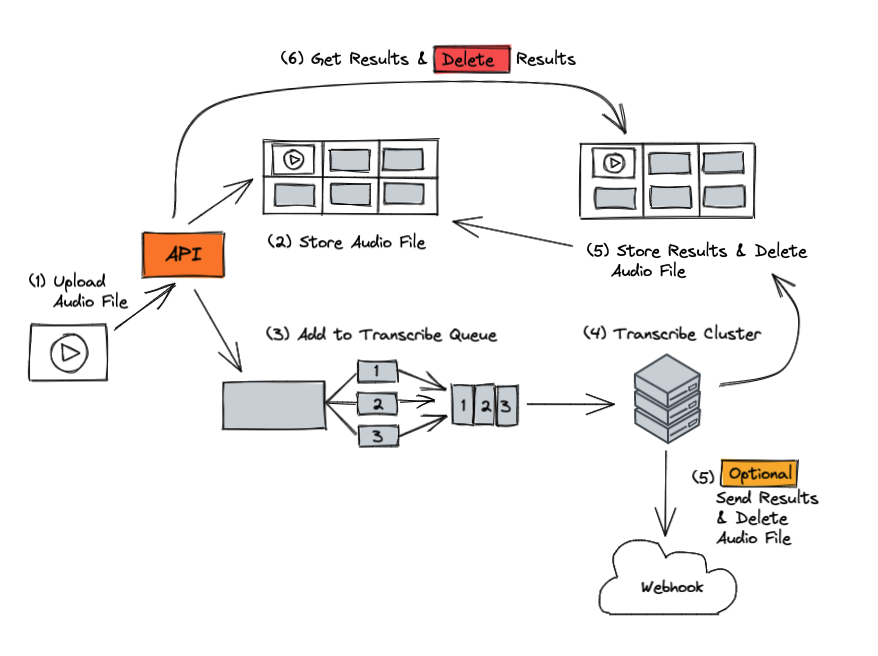

The Virlow API transcribes audio and video files. You include the audio/video file in your request to the API. The diagram on this page shows the Fala Voice transcription workflow.

To transcribe an audio/video file, you (1) submit the file, and then either (5) receive the transcript at the webhook you defined or (6) retrieve the transcript yourself. The numbers refer to the steps in the workflow diagram.

Single Channel Request

In the code snippets below, we show how to submit your audio/video file to the API for transcription. After submitting your POST request, you will get a response that includes an id key, status message and any additional data you posted.

The status key shows the status of your transcription. It will start with "queued", and then go to "processing", and finally to "completed".

The id key is the unique ID of your transcription job. Use it to poll the API until the job has completed.
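The polling described above can be sketched as a small loop. This is a minimal sketch, not the official client: the endpoint for reading a job's status is not covered in this section, so `fetch_job` is a hypothetical function that you supply to perform the HTTP request and return the decoded JSON. The interval and attempt limit are also illustrative assumptions.

```python
import time
from typing import Callable, Dict

# Statuses named in this section, in the order a job moves through them.
STATUS_ORDER = ("queued", "processing", "completed")


def wait_until_completed(
    job_id: str,
    fetch_job: Callable[[str], Dict[str, str]],
    interval_seconds: float = 5.0,
    max_attempts: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, str]:
    """Call fetch_job(job_id) repeatedly until its "status" is "completed".

    fetch_job is a hypothetical, caller-supplied function that performs the
    actual HTTP request and returns the decoded JSON object for the job.
    """
    for _ in range(max_attempts):
        job = fetch_job(job_id)
        status = job.get("status")
        if status == "completed":
            return job
        if status not in STATUS_ORDER:
            # Anything outside the documented statuses is treated as a failure.
            raise RuntimeError(f"Unexpected status {status!r} for job {job_id}")
        sleep(interval_seconds)
    raise TimeoutError(f"Job {job_id} did not complete after {max_attempts} polls")
```

Passing `sleep` as a parameter makes the loop easy to exercise without real delays, for example with a fake `fetch_job` that returns "queued", then "processing", then "completed".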

This code sample is for single-channel (mono) audio, so we pass false for the dual_channel key.

Node.js (axios):

    var axios = require('axios');
    var FormData = require('form-data');
    var fs = require('fs');

    // Build the multipart form: the audio file plus the transcription options.
    var data = new FormData();
    data.append('audio', fs.createReadStream('audio.wav'));
    data.append('dual_channel', 'false');
    data.append('punctuate', 'true');
    data.append('webhook_url', '');
    data.append('speaker_diarization', 'false');
    data.append('language', 'enUs');
    data.append('short_hand_notes', 'true');
    data.append('tldr', 'true');
    data.append('custom', 'YOUR_VALUE');

    var config = {
      method: 'post',
      url: 'https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY',
      headers: {
        ...data.getHeaders()
      },
      data: data
    };

    axios(config)
      .then(function (response) {
        console.log(JSON.stringify(response.data));
      })
      .catch(function (error) {
        console.log(error);
      });

Java (Unirest):

    Unirest.setTimeouts(0, 0);
    // The form field name "audio" matches the other samples on this page.
    HttpResponse<String> response = Unirest.post("https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY")
      .field("audio", new File("audio.wav"))
      .field("dual_channel", "false")
      .field("punctuate", "true")
      .field("webhook_url", "")
      .field("speaker_diarization", "false")
      .field("language", "enUs")
      .field("custom", "YOUR_VALUE")
      .asString();

Python (requests):

    import requests

    url = "https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY"

    payload = {
        'dual_channel': 'false',
        'punctuate': 'true',
        'webhook_url': '',
        'speaker_diarization': 'false',
        'language': 'enUs',
        'custom': 'YOUR_VALUE',
    }

    # Open the file in binary mode and close it once the upload finishes.
    with open('audio.wav', 'rb') as audio_file:
        files = [('audio', ('audio.wav', audio_file, 'audio/wav'))]
        response = requests.request("POST", url, data=payload, files=files)

    print(response.text)

cURL:

    curl --location --request POST 'https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY' \
      --form 'audio=@"audio.wav"' \
      --form 'dual_channel="false"' \
      --form 'punctuate="true"' \
      --form 'webhook_url=""' \
      --form 'speaker_diarization="false"' \
      --form 'language="enUs"' \
      --form 'custom="YOUR_VALUE"'

Node.js (virlow-nodejs-async package):

    // With our virlow-nodejs-async package
    // https://www.npmjs.com/package/virlow-nodejs-async
    // npm i virlow-nodejs-async

    const { transcribe } = require('virlow-nodejs-async');

    let options = {
      apiKey: "YOUR_API_KEY", // Replace with your API key
      storage: "local", // gcs => Google Cloud Storage, s3 => AWS S3, local => Local path
      file: "YOUR_AUDIO_FILE", // Location of your audio file in the chosen storage
      dualChannel: false, // true or false
      language: "enUs", // Language of audio file, example: enUs
      punctuate: true, // true or false
      webhookUrl: "", // Enter your Webhook URL
      speakerDiarization: false, // true or false
      shortHandNotes: true, // true or false
      tldr: true, // true or false
      custom: "MY_VALUE" // Enter your custom value
    };

    transcribe(options).then(function (result) {
      console.log(result.data);
      console.log(`\nYour Job ID is: ${result.data.id}\n`);
    });

Response Object

The response object includes your job ID as id. Use this value to check the status of your transcription. See the Getting the Transcription Result section of the docs for how to retrieve the results.

    {
      "id": "9f4e657e-9cd2-4b59-9397-e8d8c9956d5b",
      "status": "queued",
      "dual_channel": "false",
      "punctuate": "true",
      "webhook_url": "",
      "custom": "YOUR_VALUE",
      "speaker_diarization": "false",
      "language": "enUs",
      "timestamp_submitted": "2022-04-15T13:47:12.720Z",
      "tldr": "true",
      "short_hand_notes": "true"
    }
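As a rough illustration of working with this response, the sketch below parses the JSON body into a small typed record. It assumes the response always has the shape shown above, with boolean options echoed back as the strings "true" and "false" and the timestamp in UTC with millisecond precision. The `SubmittedJob` class and `parse_submit_response` function are names made up for this example.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class SubmittedJob:
    """Hypothetical record holding the fields most callers need."""
    id: str
    status: str
    dual_channel: bool
    custom: str
    submitted_at: datetime


def parse_submit_response(body: str) -> SubmittedJob:
    """Parse the JSON text returned after submitting a file."""
    data = json.loads(body)
    # The trailing "Z" marks UTC; it is matched literally and the zone is set explicitly.
    submitted_at = datetime.strptime(
        data["timestamp_submitted"], "%Y-%m-%dT%H:%M:%S.%fZ"
    ).replace(tzinfo=timezone.utc)
    return SubmittedJob(
        id=data["id"],
        status=data["status"],
        # Options come back as the strings "true" / "false", not JSON booleans.
        dual_channel=data.get("dual_channel") == "true",
        custom=data.get("custom", ""),
        submitted_at=submitted_at,
    )
```

Keeping the `custom` value on the record makes it straightforward to match a job back to whatever identifier you attached when submitting it.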

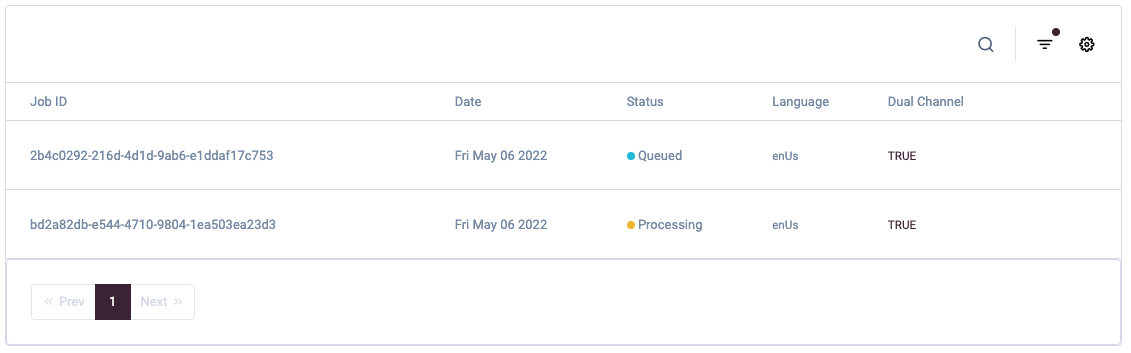

You can also view the status of your job in the Virlow Console. Navigate to Jobs, and you will be able to see up to 90 days of jobs submitted to the Virlow API.

Fala Voice Job Logs

Dual-Channel Request

The code snippets below show how to submit your audio/video file to the API for transcription. After submitting your POST request, you will get a response that includes an id key, status message, and any additional data you posted.

The status key shows the status of your transcription. It will start with "queued," then go to "processing," and finally to "completed."

The id key is the unique ID of your transcription job. Use it to poll the API until the job has completed.

This code sample is for dual-channel (stereo) audio, so we pass true for the dual_channel key.

Node.js (axios):

    var axios = require('axios');
    var FormData = require('form-data');
    var fs = require('fs');

    // Build the multipart form: the audio file plus the transcription options.
    var data = new FormData();
    data.append('audio', fs.createReadStream('audio.wav'));
    data.append('dual_channel', 'true');
    data.append('punctuate', 'true');
    data.append('webhook_url', '');
    data.append('speaker_diarization', 'false');
    data.append('language', 'enUs');
    data.append('short_hand_notes', 'true');
    data.append('tldr', 'true');
    data.append('custom', 'YOUR_VALUE');

    var config = {
      method: 'post',
      url: 'https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY',
      headers: {
        ...data.getHeaders()
      },
      data: data
    };

    axios(config)
      .then(function (response) {
        console.log(JSON.stringify(response.data));
      })
      .catch(function (error) {
        console.log(error);
      });

Java (Unirest):

    Unirest.setTimeouts(0, 0);
    // The form field name "audio" matches the other samples on this page.
    HttpResponse<String> response = Unirest.post("https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY")
      .field("audio", new File("audio.wav"))
      .field("dual_channel", "true")
      .field("punctuate", "true")
      .field("webhook_url", "")
      .field("speaker_diarization", "false")
      .field("language", "enUs")
      .field("short_hand_notes", "true")
      .field("tldr", "true")
      .field("custom", "YOUR_VALUE")
      .asString();

Python (requests):

    import requests

    url = "https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY"

    payload = {
        'dual_channel': 'true',
        'punctuate': 'true',
        'webhook_url': '',
        'speaker_diarization': 'false',
        'language': 'enUs',
        'short_hand_notes': 'true',
        'tldr': 'true',
        'custom': 'YOUR_VALUE',
    }

    # Open the file in binary mode and close it once the upload finishes.
    with open('audio.wav', 'rb') as audio_file:
        files = [('audio', ('audio.wav', audio_file, 'audio/wav'))]
        response = requests.request("POST", url, data=payload, files=files)

    print(response.text)

cURL:

    curl --location --request POST 'https://api.voice.virlow.com/v1-beta/transcript?x-api-key=YOUR_API_KEY' \
      --form 'audio=@"audio.wav"' \
      --form 'dual_channel="true"' \
      --form 'punctuate="true"' \
      --form 'webhook_url=""' \
      --form 'speaker_diarization="false"' \
      --form 'language="enUs"' \
      --form 'short_hand_notes="true"' \
      --form 'tldr="true"' \
      --form 'custom="YOUR_VALUE"'

Response Object

The response object includes your job ID as id. Use this value to check the status of your transcription. See the Getting the Transcription Result section of the docs for how to retrieve the results.

    {
      "id": "9f4e657e-9cd2-4b59-9397-e8d8c9956d5b",
      "status": "queued",
      "dual_channel": "true",
      "punctuate": "true",
      "webhook_url": "",
      "custom": "YOUR_VALUE",
      "speaker_diarization": "false",
      "language": "enUs",
      "timestamp_submitted": "2022-04-15T13:47:12.720Z",
      "tldr": "true",
      "short_hand_notes": "true"
    }

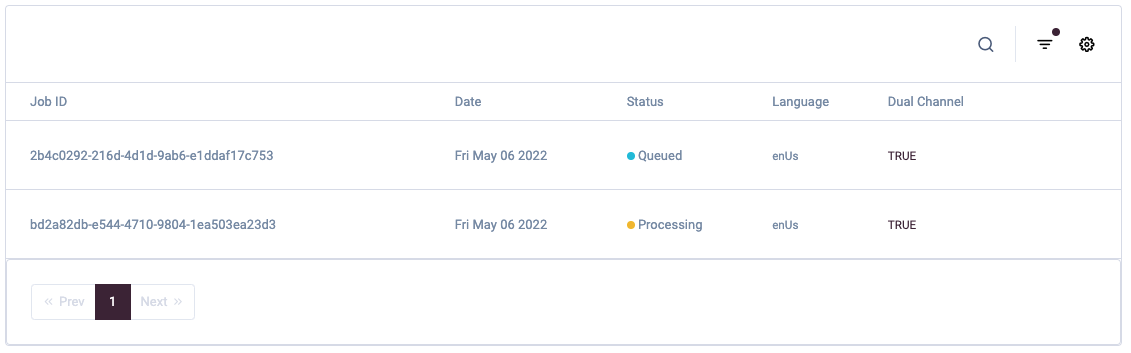

You can also view the status of your job in the Virlow Console. Navigate to Jobs, and you will be able to see up to 90 days of jobs submitted to the Virlow API.

Fala Voice Job Logs

Parameters

dual_channel

If you have a dual-channel audio file (for example, a phone call recording with the agent on one channel and the customer on the other), the API supports transcribing each channel separately.

Simply include the dual_channel parameter in your POST request when submitting files for transcription, and set this parameter to true.
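If you are unsure whether a recording is mono or stereo, you can inspect it before submitting. The sketch below uses Python's standard `wave` module to read the channel count of a WAV file and return the string value for dual_channel. It assumes an uncompressed PCM WAV file, which is what the `wave` module reads; the function name `dual_channel_flag` is made up for this example.

```python
import wave


def dual_channel_flag(wav_path: str) -> str:
    """Return "true" for a two-channel WAV file and "false" otherwise."""
    with wave.open(wav_path, "rb") as wav_file:
        return "true" if wav_file.getnchannels() == 2 else "false"
```

The returned string can be used directly as the dual_channel form value in the request samples on this page.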

punctuate

To punctuate the transcription text and capitalize proper nouns, simply include the punctuate parameter in your POST request when submitting files for transcription, and set this parameter to true.

webhook_url

Instead of polling for the result of your transcription, you can receive a webhook once your transcript is complete or if there was an error transcribing your audio file.

The ability to use a webhook to retrieve your transcription will be introduced in our v1.1.0 Civet release.
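For orientation only, here is a minimal sketch of what a receiving endpoint could look like, built on Python's standard `http.server`. The webhook payload is not documented on this page, so the sketch assumes, without confirmation, that the notification is a POST with a JSON body containing `id` and `status` keys like the submission response. The names `summarize_notification`, `WebhookHandler`, and `serve` are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def summarize_notification(raw_body: bytes) -> str:
    """Turn a notification body into a one-line log message.

    Assumes (not confirmed by this page) a JSON object with "id" and "status".
    """
    payload = json.loads(raw_body.decode("utf-8"))
    return f"job {payload.get('id')} is {payload.get('status')}"


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            message = summarize_notification(self.rfile.read(length))
        except (ValueError, AttributeError):
            # Body was not valid UTF-8 JSON, or was not a JSON object.
            self.send_response(400)
            self.end_headers()
            return
        print(message)
        # Acknowledge receipt with an empty success response.
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Start the receiver; call this yourself, it blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

The URL where this receiver is reachable is what you would pass as webhook_url when submitting a file.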

speaker_diarization

The Virlow API can automatically detect the number of speakers in your audio file, and each word in the transcription text can be associated with its speaker. Simply include the speaker_diarization parameter in your POST request, and set this to true.

language

The initial release of the Virlow API accepts only US English (enUs) audio files. Please review the Roadmap for upcoming language support.

tldr

TLDR is a common abbreviation for "Too Long; Didn't Read." Setting the tldr parameter to true adds a summary of the transcript, generated by the Fala Voice TLDR AI model. The full transcript is also included in the result object.

short_hand_notes

Short Hand Notes converts your transcript to shorthand notes, giving you additional analytics for your audio files. Setting the short_hand_notes parameter to true adds Short Hand Notes to the result, generated by the Virlow Short Hand Notes AI model. The full transcript is also included in the result object.

custom

You can also attach a custom value to your transcription job. Simply include the custom parameter in your POST request when submitting files for transcription, and set it to YOUR_VALUE. The value is echoed back in the response object.
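The parameters above can be collected with a small helper. This is a sketch rather than part of any Virlow SDK: `build_transcript_fields` is a hypothetical name, and the defaults are illustrative choices. It converts Python booleans into the "true" and "false" strings used in the request samples on this page.

```python
from typing import Dict


def build_transcript_fields(
    dual_channel: bool = False,
    punctuate: bool = True,
    speaker_diarization: bool = False,
    tldr: bool = False,
    short_hand_notes: bool = False,
    language: str = "enUs",
    webhook_url: str = "",
    custom: str = "",
) -> Dict[str, str]:
    """Build the non-file form fields for a transcription request."""

    def flag(value: bool) -> str:
        # Form fields are sent as strings, as in the samples on this page.
        return "true" if value else "false"

    return {
        "dual_channel": flag(dual_channel),
        "punctuate": flag(punctuate),
        "webhook_url": webhook_url,
        "speaker_diarization": flag(speaker_diarization),
        "language": language,
        "short_hand_notes": flag(short_hand_notes),
        "tldr": flag(tldr),
        "custom": custom,
    }
```

The returned dictionary can be passed as the form data of the POST request, alongside the audio file, in the same way as the payload in the Python sample.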